This study was approved by the bioethics committee of Peking University School and Hospital of Stomatology (PKUSSIRB-201837103). The methods were conducted in accordance with the approved guidelines. The X-ray films used in this study were selected from a database without extraction of patients’ private information, such as name, gender, age, address, phone numbers, etc. All these films were obtained for ordinary diagnosis and treatment purposes. The requirement to obtain informed consent from patients was waived by the ethics committee.

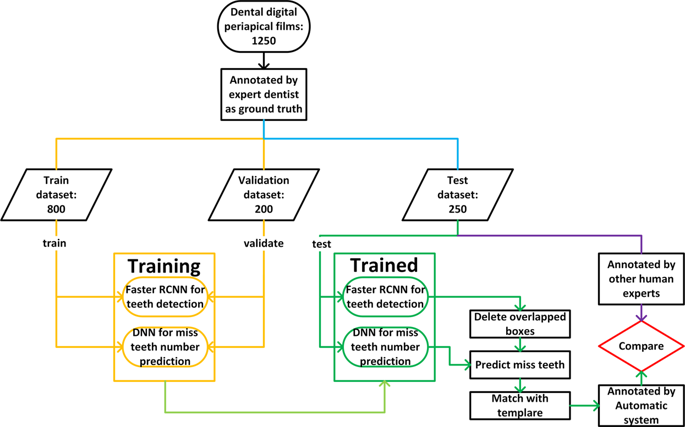

The overall workflow of this research is illustrated in Fig. 1. A total of 1,250 dental X-ray films were collected and divided into training, validation, and test datasets. The training and validation datasets were used to train a Faster R-CNN and a deep neural network (DNN). During testing, images in the test dataset were analyzed by the trained Faster R-CNN to detect teeth, and missing teeth were predicted by the trained DNN. After post-processing, the images were annotated automatically, and the annotations were compared with those made by three dentists.

Image data and ground truth annotations

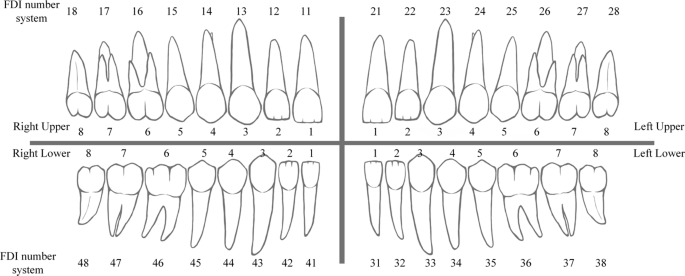

A total of 1,250 digitized dental periapical films were collected from Peking University School and Hospital of Stomatology. Each film was digitized at a resolution of 12.5 pixels per mm, giving a size of approximately (300 to 500) × (300 to 400) pixels, and saved as a JPG image file with a specific identification code. These image files were collected anonymously to ensure that no private information (such as patient name, gender, and age) was revealed. Subsequently, an expert dentist with more than five years of clinical experience drew a rectangular bounding box to frame each intact tooth (including crown and root) and provided the corresponding tooth number as ground truth (GT). The Fédération Dentaire Internationale (FDI) teeth numbering system (ISO-3950) was used, labeling the upper right 8 teeth as 11–18, the upper left 8 teeth as 21–28, the lower left 8 teeth as 31–38, and the lower right 8 teeth as 41–48 (as shown in Fig. 2). When annotating, the dentist was asked to draw a minimal-size bounding box for each tooth in an image. Point coordinates were expressed as pixel distances from the top left corner of the image, so that each tooth bounding box could be recorded by its top left and bottom right corner points (xmin, ymin) and (xmax, ymax). A tooth truncated at the edge of the image was not annotated if the truncated portion exceeded one half of the tooth size.

FDI teeth numbering system: 11–18 = right upper 1–8, 21–28 = left upper 1–8, 31–38 = left lower 1–8, 41–48 = right lower 1–8; 1. Central incisor, 2. Lateral incisor, 3. Canine, 4. First premolar, 5. Second premolar, 6. First molar, 7. Second molar, 8. Third molar.

The 1,250 annotated images were randomly divided into 3 datasets: a training set with 800 images, a validation set with 200 images, and a test set with 250 images.
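The random split described above can be sketched as follows. This is a minimal illustration only: the identifier format, the function name `split_dataset`, and the fixed seed are assumptions, since the source does not state how the randomization was performed.

```python
import random

def split_dataset(image_ids, n_train=800, n_val=200, n_test=250, seed=0):
    """Shuffle image identifiers and cut them into train/validation/test lists.

    The seed is a hypothetical choice that only makes the sketch reproducible.
    """
    if len(image_ids) != n_train + n_val + n_test:
        raise ValueError("split sizes must add up to the number of images")
    shuffled = list(image_ids)
    random.Random(seed).shuffle(shuffled)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test

# Hypothetical identification codes standing in for the 1,250 films.
all_ids = [f"film_{i:04d}" for i in range(1250)]
train_ids, val_ids, test_ids = split_dataset(all_ids)
```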

Neural network model construction, training, and validation

An object detection tool package27 based on TensorFlow, with source code, was downloaded from GitHub28. Faster R-CNN with Inception ResNet version 2 (atrous version), which was one of the state-of-the-art object detectors for multiple categories, was selected as the neural network model.

Training was executed on a GPU (Quadro M4000, NVIDIA, USA) with 8 GB of memory and 1664 CUDA cores. The algorithms ran on a TensorFlow 1.4.0 backend, and the operating system was Ubuntu 16.04.

A set of 800 annotated X-ray images was used to train the Faster R-CNN object detector. The input images were resized, maintaining their original aspect ratio, so that the shorter dimension was 300 pixels. A total of 32 tooth classes had to be recognized in the X-ray images.
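As a small worked example of the resizing rule, the target size of an image can be computed as below. The function name `resized_shape` and the rounding to the nearest pixel are assumptions; the source only states that the aspect ratio is kept and the shorter side becomes 300 pixels.

```python
def resized_shape(height, width, min_dimension=300):
    """Return (new_height, new_width) so that the shorter side equals min_dimension.

    The aspect ratio is preserved; rounding to whole pixels is an assumption.
    """
    scale = min_dimension / min(height, width)
    return round(height * scale), round(width * scale)

# A 400 x 500 pixel film is scaled by 0.75.
example_shape = resized_shape(400, 500)
```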

Mean average precision (mAP)29 was selected as the metric for the accuracy of the object detector during validation, and was used to adjust the training parameters. First, the detected boxes were compared with the ground truth boxes, where the Intersection-Over-Union (IOU) is defined as:

$$\mathrm{IOU}=\frac{\mathrm{Area}_{DB}\cap \mathrm{Area}_{GTB}}{\mathrm{Area}_{DB}\cup \mathrm{Area}_{GTB}}$$

(1)

where \(\mathrm{Area}_{DB}\) and \(\mathrm{Area}_{GTB}\) represent the areas of the detected box and its corresponding ground truth box. With the IOU threshold set to 0.5, Precision and Recall are calculated as:

$$\mathrm{Precision}=\frac{TP}{TP+FP}$$

(2)

$$\mathrm{Recall}=\frac{TP}{TP+FN}$$

(3)

where TP (True Positive) is the number of objects detected with IOU > 0.5, FP (False Positive) is the number of detected boxes with IOU ≤ 0.5 or that detect an object more than once, and FN (False Negative) is the number of objects that are not detected or are detected with IOU ≤ 0.5.
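Equations (1) to (3) can be illustrated with a short sketch for axis-aligned boxes stored as (xmin, ymin, xmax, ymax). The function names are illustrative, and returning 0 for an empty denominator is an assumption made only to keep the functions total.

```python
def box_iou(box_a, box_b):
    """IOU of two boxes given as (xmin, ymin, xmax, ymax) in pixels."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Width and height of the intersection are clamped at zero for disjoint boxes.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def precision_recall(tp, fp, fn):
    """Precision and Recall from counts of true/false positives and false negatives."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Two 10 x 10 boxes shifted by 5 pixels share 50 of 150 pixels of union.
example_iou = box_iou((0, 0, 10, 10), (5, 0, 15, 10))
```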

For each object class, an Average Precision (AP) is defined29:

$$AP=\frac{1}{11}\sum_{r\in \{0.0,0.1,\ldots ,1.0\}}p_{interp}(r)$$

(4)

where \(p_{interp}(r)\) is the maximum precision over all recall values greater than or equal to \(r\)29:

$$p_{interp}(r)=\max_{\tilde{r}:\,\tilde{r}\ge r}p(\tilde{r})$$

(5)

Finally, the mean average precision (mAP) is calculated as an average of APs for all object classes:

$$mAP=\frac{1}{N_{class}}\sum AP$$

(6)
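Equations (4) to (6) can be sketched as below for a precision-recall curve given as paired lists. The function names are illustrative, and treating \(p_{interp}(r)\) as 0 when no recall value reaches \(r\) is an assumption of this sketch rather than something stated in the text.

```python
def average_precision_11pt(recalls, precisions):
    """11-point interpolated AP from paired (recall, precision) points."""
    total = 0.0
    for k in range(11):
        r = k / 10  # recall levels 0.0, 0.1, ..., 1.0
        # Interpolated precision: best precision at any recall >= r.
        candidates = [p for rec, p in zip(recalls, precisions) if rec >= r]
        total += max(candidates) if candidates else 0.0  # assumed 0 if unreachable
    return total / 11

def mean_average_precision(ap_per_class):
    """Average the per-class AP values; ap_per_class maps class label to AP."""
    return sum(ap_per_class.values()) / len(ap_per_class)

# Toy curve: precision 1.0 up to recall 0.5, then 0.5 at recall 1.0.
example_ap = average_precision_11pt([0.5, 1.0], [1.0, 0.5])
example_map = mean_average_precision({"11": 1.0, "12": 0.5})
```

In the toy curve, six recall levels (0.0 to 0.5) take precision 1.0 and five (0.6 to 1.0) take 0.5, so the AP is 8.5/11.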

After several attempts, the training parameters were set as follows to achieve a high mAP: a batch size of 1, a total of 50,000 iterations, and an initial learning rate of 0.004 that was halved after 10,000 iterations. A model pre-trained on the COCO dataset was loaded as the fine-tuning checkpoint. All other settings were left at their defaults.

The average training time was approximately 1.1 seconds per iteration. The total loss dropped from 5.84 to approximately 0.03 after 50,000 iterations, and the mAP on the validation dataset rose to a plateau of approximately 0.80.

Metrics of performances on test images

After training and validation, the model was tested on the test dataset of 250 images. The detected boxes were evaluated using certain metrics that followed clinical dental considerations.

The boxes detected by the trained Faster R-CNN were compared with the ground truth boxes. Each of the Q detected boxes was paired with each of the R ground truth boxes, and the IOU of each box-pair (detected box – ground truth box) was calculated, forming an IOU matrix of dimension Q × R.

A box-pair with a value exceeding a threshold of 0.7 in the IOU matrix was considered to be a match. Subsequently, the matched box-pair element was removed from the matrix, and the process was repeated until the max IOU value was under the threshold of 0.7 or no box-pairs existed.
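The matching loop described above can be sketched as follows. The sketch assumes that "removing the matched element" means that both the detected box and the ground truth box leave the pool, so each box is matched at most once; the source does not spell this out. The name `greedy_match` is illustrative.

```python
def greedy_match(iou_matrix, threshold=0.7):
    """Greedy one-to-one matching on a Q x R IOU matrix.

    iou_matrix[q][r] is the IOU of detected box q and ground truth box r.
    Returns a list of (q, r, iou) for the matched box-pairs.
    """
    matches = []
    free_q = set(range(len(iou_matrix)))
    free_r = set(range(len(iou_matrix[0]))) if iou_matrix else set()
    while free_q and free_r:
        # Pick the remaining box-pair with the largest IOU.
        q, r = max(((q, r) for q in free_q for r in free_r),
                   key=lambda qr: iou_matrix[qr[0]][qr[1]])
        best = iou_matrix[q][r]
        if best <= threshold:
            break  # no remaining pair exceeds the threshold
        matches.append((q, r, best))
        # Assumption: a matched pair removes its whole row and column.
        free_q.remove(q)
        free_r.remove(r)
    return matches

# Three detected boxes against two ground truth boxes.
example_matches = greedy_match([[0.90, 0.75],
                                [0.80, 0.10],
                                [0.20, 0.30]])
```

In this example only detected box 0 and ground truth box 0 are matched: once they are removed, the best remaining IOU is 0.30, which is under the threshold.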

The matched boxes were considered to successfully detect the teeth from the background in the X-ray films. The precision and recall of teeth detection can be calculated as follows:

$$\mathrm{Detection\,Precision}=\frac{N_{match}}{N_{DB}}$$

(7)

$$\mathrm{Detection\,Recall}=\frac{N_{match}}{N_{GTB}}$$

(8)

where \(N_{match}\) is the number of matched box-pairs, \(N_{DB}\) is the number of detected boxes, and \(N_{GTB}\) is the number of ground truth boxes. The mean IOU value of the matched boxes, defined below, represents how precisely the detected boxes match the ground truth boxes.

$$\mathrm{Mean\,IOU}=\frac{\sum IOU_{match}}{N_{match}}$$

(9)

If a detected box and its matched ground truth box carry the same tooth number label, the tooth is correctly numbered and counted as a true positive numbering (TPN). The precision and recall of teeth numbering can be calculated as follows:

$$\mathrm{Numbering\,Precision}=\frac{N_{TPN}}{N_{DB}}$$

(10)

$$\mathrm{Numbering\,Recall}=\frac{N_{TPN}}{N_{GTB}}$$

(11)
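Equations (7) to (11) can be computed together once the matched box-pairs are known. The sketch below assumes the matches are given as (detected index, ground truth index, IOU) triples; the function name, the dictionary keys, and the 0 returned for empty denominators are illustrative choices.

```python
def evaluate(matches, det_labels, gt_labels):
    """Detection and numbering metrics for one set of images.

    matches: list of (det_index, gt_index, iou) for matched box-pairs.
    det_labels / gt_labels: tooth numbers of detected and ground truth boxes.
    """
    n_db, n_gtb, n_match = len(det_labels), len(gt_labels), len(matches)
    # A true positive numbering (TPN) is a matched pair with identical labels.
    n_tpn = sum(1 for q, r, _ in matches if det_labels[q] == gt_labels[r])
    return {
        "detection_precision": n_match / n_db if n_db else 0.0,
        "detection_recall": n_match / n_gtb if n_gtb else 0.0,
        "mean_iou": sum(iou for _, _, iou in matches) / n_match if n_match else 0.0,
        "numbering_precision": n_tpn / n_db if n_db else 0.0,
        "numbering_recall": n_tpn / n_gtb if n_gtb else 0.0,
    }

# Three detections, two ground truth teeth, two matches, one correct number.
example_metrics = evaluate(
    matches=[(0, 0, 0.9), (1, 1, 0.8)],
    det_labels=["16", "15", "14"],
    gt_labels=["16", "14"],
)
```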

Postprocessing procedures

To improve the teeth numbering results, certain postprocessing procedures were proposed.

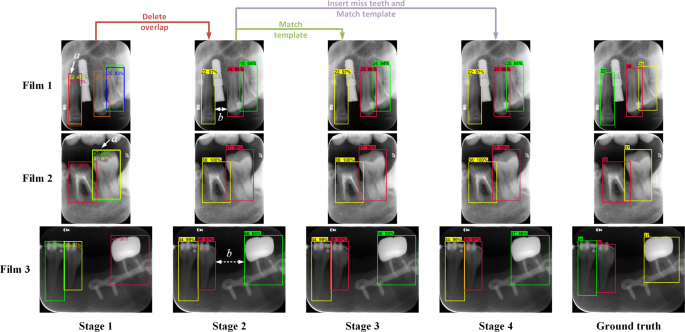

Filtering of excessive overlapped boxes

The non-maximum suppression algorithm21 was applied for teeth box detection. Overlapping boxes with the same predicted tooth number are sorted by their probability scores; the box with the maximum score is retained, and other boxes whose IOU with it exceeds the threshold of 0.6 are deleted. However, overlapping boxes with a high IOU are not removed by this step if they are predicted with different numbers (Fig. 3a). To detect these overlapping boxes, the IOU of every pair of boxes in an image was calculated. Whenever the IOU of a box-pair exceeded the threshold of 0.7, the box with the lower score was deleted.
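The additional filter across different tooth numbers can be sketched as a class-agnostic suppression pass. This sketch processes boxes in descending score order and drops a box only when it overlaps a box that has already been kept; whether the original procedure also deletes boxes that overlap an already deleted box is not stated, so that detail is an assumption. The dictionary keys and function names are illustrative.

```python
def box_iou(box_a, box_b):
    """IOU of two boxes given as (xmin, ymin, xmax, ymax)."""
    iw = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    ih = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = iw * ih
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def filter_overlaps(detections, threshold=0.7):
    """Remove the lower-scoring box of any pair whose IOU exceeds the threshold.

    detections: list of dicts with keys 'box', 'label', 'score'.
    Labels are ignored on purpose, so boxes with different numbers compete.
    """
    kept = []
    for det in sorted(detections, key=lambda d: d["score"], reverse=True):
        if all(box_iou(det["box"], k["box"]) <= threshold for k in kept):
            kept.append(det)
    return kept

# The first two boxes cover nearly the same tooth but carry different numbers.
example_kept = filter_overlaps([
    {"box": (0, 0, 10, 20), "label": "16", "score": 0.9},
    {"box": (1, 0, 10, 20), "label": "17", "score": 0.6},
    {"box": (20, 0, 30, 20), "label": "15", "score": 0.8},
])
```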

Examples of annotation processing after each stage: (Stage 1) annotated by the trained Faster R-CNN, with certain overlapping boxes (a); (Stage 2) after deleting the overlapping boxes with lower scores, certain tooth number labels were still incorrect because the neural network confused them with other similar teeth; (Stage 3) the tooth number labels were matched with the template for correction, but errors were introduced when there were missing teeth (in films 1 and 3); however, the gap between adjacent teeth boxes in Stage 2 (b) could be treated as a feature to predict the missing teeth; (Stage 4) after inserting the predicted missing teeth and matching the Stage 2 labels with the template, the tooth number labels were corrected.

Application of teeth arrangement rules

After deleting the overlapping boxes, the precision and recall of teeth numbering were still below 0.8, possibly because of the limited number of training images in this research. However, certain rules of teeth arrangement can help. In an intact dentition with no missing teeth, there are usually 16 teeth in each of the upper and lower dentitions (Fig. 2), with bilateral symmetry. In the FDI numbering system, the teeth are arranged as follows: 18–11 for upper right, 21–28 for upper left, 48–41 for lower right, and 31–38 for lower left. Moreover, all these teeth can be classified into six categories: wisdom, molar, premolar, canine, lateral incisor, and central incisor. Teeth in the same category are highly similar, and a certain degree of similarity also exists between different categories. This prior domain knowledge was used to improve the results of teeth detection.

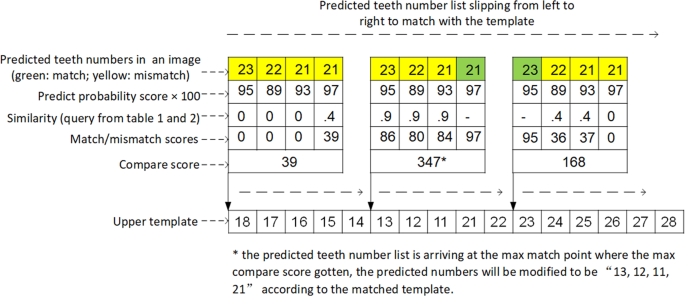

The FDI teeth numbering system was used as the template, which has an arrangement of “18,17,16,15,14,13,12,11,21,22,23,24,25,26,27,28” for the upper teeth, and “48,47,46,45,44,43,42,41,31,32,33,34,35,36,37,38” for the lower teeth. Because all the teeth in one X-ray image belonged to the same dentition, either upper or lower, the detected box labels should match either the upper or the lower teeth template. For example, if the detected box labels in one image were “17,16,14,15,13”, then compared with the upper template the labels “14,15” would be considered wrongly arranged and should be corrected to give “17,16,15,14,13”.

When comparing the predicted teeth number list of an image with the template, the predicted list was slid along the template from left to right, and a match score was calculated at each position:

$$\mathrm{Match\,Score}=\sum_{X\in \{x|x=T\}}\mathrm{Prediction\,Score}\,(X)$$

(12)

where only the prediction scores (the probability scores output by the Faster R-CNN) of tooth numbers \(X\) that match the template are summed, i.e., those for which the predicted tooth number of the detected box equals the tooth number at the corresponding template position (\(x = T\)), as seen in Fig. 4.

Illustration of the teeth arrangement template-matching algorithm.

If the predicted tooth number does not equal the one at its corresponding template position, a mismatch similarity weight between the predicted tooth and the template tooth is applied to calculate a “mismatch score.” First, all the teeth were classified into several categories according to their appearance (Table 1). For teeth in the same dentition, the mismatch similarity matrix was set according to an expert dentist’s experience to provide the similarity values between categories (Table 2). The mismatch score was calculated as follows:

$$\mathrm{Mismatch\,Score}=\sum_{X\in \{x|x\ne T\}}\mathrm{Prediction\,Score}\,(X)\ast \mathrm{similarity}\,(X,T)$$

(13)

where the prediction score was multiplied with a similarity value, which can be inferred from Table 2, according to the category of the predicted tooth number (X) and its corresponding template tooth number (T) in Table 1. Finally, a comparison score was defined to be the sum of the match score and mismatch score:

$$\mathrm{Comparison\,Score}=\mathrm{Match\,Score}+\mathrm{Mismatch\,Score}$$

(14)

The sliding label list eventually reaches the best-matching position, where the maximum comparison score is obtained, and the template is used to correct the predicted number list at this position (Fig. 4).
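The template-matching procedure of Eqs. (12) to (14) can be sketched as below. Tables 1 and 2 are not reproduced here, so the category map (derived from the six categories named earlier and keyed by the second FDI digit) and the similarity values (0.8 within a category, 0 otherwise) are hypothetical stand-ins, not the values set by the expert dentist. Trying both templates and keeping the best window is also an assumption of this sketch.

```python
UPPER = "18 17 16 15 14 13 12 11 21 22 23 24 25 26 27 28".split()
LOWER = "48 47 46 45 44 43 42 41 31 32 33 34 35 36 37 38".split()

# HYPOTHETICAL stand-in for Table 1: category from the tooth position digit.
CATEGORY = {"1": "central incisor", "2": "lateral incisor", "3": "canine",
            "4": "premolar", "5": "premolar",
            "6": "molar", "7": "molar", "8": "wisdom"}

def similarity(pred, tmpl):
    """HYPOTHETICAL stand-in for Table 2 (values are not from the source)."""
    if pred == "M":  # placeholder for a missing tooth: similarity 0
        return 0.0
    return 0.8 if CATEGORY[pred[1]] == CATEGORY[tmpl[1]] else 0.0

def comparison_score(preds, window):
    """Match score plus mismatch score for one position of the sliding list."""
    total = 0.0
    for (label, score), tmpl in zip(preds, window):
        total += score if label == tmpl else score * similarity(label, tmpl)
    return total

def correct_numbers(preds):
    """preds: list of (label, score) ordered left to right in the image.

    Returns (corrected_labels, best_score); corrected_labels is None when the
    list is longer than a template.
    """
    best_score, best_window = -1.0, None
    for template in (UPPER, LOWER):
        for start in range(len(template) - len(preds) + 1):
            window = template[start:start + len(preds)]
            score = comparison_score(preds, window)
            if score > best_score:
                best_score, best_window = score, window
    return best_window, best_score

# The example from the text, with made-up prediction scores.
example_labels, example_score = correct_numbers(
    [("17", 0.9), ("16", 0.9), ("14", 0.6), ("15", 0.7), ("13", 0.9)]
)
```

With these made-up scores the best window is the upper-right segment “17,16,15,14,13”: three exact matches contribute 2.7, and the two swapped premolars contribute 0.8 × (0.6 + 0.7) = 1.04.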

Prediction of missing teeth

When teeth are missing, the predicted list can never match the FDI scheme unless it contains placeholders for the missing teeth. As shown in Fig. 3b, there are usually gaps between adjacent detected boxes where teeth are missing; thus, the horizontal distance between adjacent box margins is one of the key features for predicting missing teeth. However, the gap left by missing teeth may disappear when the adjacent teeth are strongly inclined, so the distance between the centers of adjacent boxes was considered as another key feature.

A simple deep neural network classifier with two fully connected hidden layers (10 neural units each) was set up. The horizontal margin distance and center distance of two adjacent boxes were used as the input features, while the number of missing teeth (ranging from 0 to 3, as observed in the training dataset) was set as the label to predict. After training on the same training set of 800 images for 100 epochs, a precision of 0.981 was achieved on the validation dataset. Subsequently, placeholders “M” were inserted where missing teeth were predicted (for example, “17,16,M,M,13”) before matching with the template. The similarity of a placeholder “M” with its corresponding template tooth number was set to 0 when calculating the comparison score.
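The two input features and the placeholder insertion can be sketched as follows. The classifier itself is not reproduced; the sketch takes the predicted number of missing teeth per gap as given. Measuring the center distance horizontally, and the function names, are assumptions of this illustration.

```python
def gap_features(left_box, right_box):
    """Features for the gap between two adjacent boxes (xmin, ymin, xmax, ymax).

    left_box is assumed to lie to the left of right_box in the image.
    """
    # Horizontal distance between facing margins; negative if the boxes overlap.
    margin_distance = right_box[0] - left_box[2]
    # Horizontal distance between box centers (assumed to be horizontal only).
    center_distance = ((right_box[0] + right_box[2]) / 2
                       - (left_box[0] + left_box[2]) / 2)
    return margin_distance, center_distance

def insert_placeholders(labels, missing_counts):
    """Insert 'M' placeholders between adjacent labels.

    missing_counts[i] is the predicted number of missing teeth (0 to 3)
    between labels[i] and labels[i + 1].
    """
    out = []
    for i, label in enumerate(labels):
        out.append(label)
        if i < len(missing_counts):
            out.extend(["M"] * missing_counts[i])
    return out

example_features = gap_features((0, 0, 40, 100), (60, 0, 100, 100))
example_list = insert_placeholders(["17", "16", "13"], [0, 2])
```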

Comparison with human experts and our previous fast R-CNN method

To evaluate the performance level of the developed teeth detection system, three expert dentists (A, B, and C) were invited to annotate the test dataset. Expert A had approximately three years of experience in reading dental periapical X-rays, B had approximately two years, and C had approximately four years. The rules of human annotation were set as follows: (1) drawing a minimum-sized bounding box for each tooth in the images, and (2) using the FDI numbering system. In addition, some ground truth annotations from the training dataset were shown to the dentists as examples of how to annotate. Modifications were allowed during or after each annotation, and the experimenter reviewed the annotations to find and correct possible mistakes before final submission. The annotations by the dentists were matched with the ground truth data to calculate the precisions, recalls, and IOUs.