Study design and participants

In this work, we collected large-scale CBCT imaging data from multiple hospitals in China, including the Stomatological Hospital of Chongqing Medical University (CQ-hospital), the First People’s Hospital of Hangzhou (HZ-hospital), the Ninth People’s Hospital of Shanghai Jiao Tong University (SH-hospital), and 12 dental clinics. All dental CBCT images were acquired from patients in routine clinical care. Most of these patients needed dental treatments, such as orthodontics, dental implants, and restoration. In total, we collected 4938 CBCT scans of 4215 patients (mean age: 38.4 years, with 2312 females and 1903 males): 4531 scans from the CQ-hospital, HZ-hospital, and SH-hospital as the internal dataset, and 407 CBCT scans of 404 patients from the remaining 12 dental clinics as the external dataset.

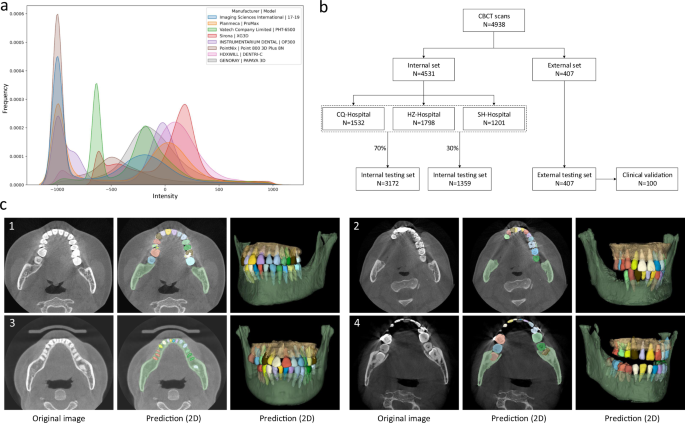

The detailed imaging protocols of the studied data (i.e., image resolution, manufacturer, manufacturer’s model, and radiation dose information of tube current and tube voltage) are listed in Table 1. To show the image style variations across different manufacturers caused by radiation dose factors (e.g., tube current and tube voltage), we also provide intensity histograms of the CBCT data collected from different centers and different manufacturers. As shown in Fig. 1a, there are large appearance variations across the data, indicating the necessity of collecting a large-scale dataset for developing an AI system with good robustness and generalizability. Besides the demographic variables and imaging protocols, Table 1 also shows the data distribution for dental abnormalities, including missing teeth, misalignment, and metal artifacts. Notably, as a strong indicator of clinical applicability, it is crucial to verify the feasibility and robustness of an AI-based segmentation system on challenging cases with dental abnormalities, as commonly encountered in practice. To define the ground-truth labels of individual teeth and alveolar bones for model training and performance evaluation, each CBCT scan was manually annotated and checked by senior raters with rich experience (see details in Supplementary Fig. 1).

a The overall intensity histogram distributions of the CBCT data collected from different manufacturers. b The CBCT dataset consists of an internal set and an external set. The internal set, collected from three hospitals, is randomly divided into the training dataset and the internal testing dataset. All 407 external CBCT scans, collected from 12 dental clinics, are used as the external testing dataset, among which 100 CBCT scans are randomly selected for clinical validation by comparing the performance with expert radiologists. c Qualitative comparison of tooth and bone segmentation on the four center sets. The original CBCT images are shown in the 1st column, and the segmentation results in 2D and 3D views are shown in the 2nd and 3rd columns, respectively.

As shown in Fig. 1b, in our experiments, we randomly sampled 70% (i.e., 3172) of the CBCT scans from the internal dataset (CQ-hospital, HZ-hospital, and SH-hospital) for model training and validation; the remaining 30% of the data (i.e., 1359 scans) were used as the internal testing set. Moreover, to further evaluate how well the learned deep learning models generalize to data from completely unseen centers and patient cohorts, we used the external dataset collected from 12 dental clinics for independent testing. To verify the clinical applicability of our AI system in more detail, we randomly selected 100 CBCT scans from the external set and compared the segmentation results produced by our AI system with those of expert radiologists. In addition, we provide the data distribution of the abnormalities in the training and testing datasets. As shown in Supplementary Table 1 in the Supplementary Materials, the internal testing set and the training set have similar distributions of dental abnormalities, as they are randomly sampled from the same large-scale dataset. In contrast, since the external dataset is collected from different dental clinics, the distribution of its dental abnormalities is slightly different from that of the internal set. Notably, some subjects may simultaneously have more than one kind of abnormality. Overall, this proof-of-concept study can fully mimic the heterogeneous environments in real-world clinical practice.
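The 70/30 partition described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `split_scans`, the seed, and the integer scan IDs are hypothetical, and the pool size of 4531 is simply the sum of the two reported subset sizes (3172 + 1359). The sketch splits at the scan level; the text does not state whether scans from the same patient were kept in the same subset.

```python
import random


def split_scans(scan_ids, train_fraction=0.7, seed=0):
    """Randomly partition scan IDs into a training/validation pool and a test pool.

    Hypothetical helper: the seed and the rounding rule are assumptions.
    """
    ids = list(scan_ids)
    rng = random.Random(seed)      # local generator, so the split is reproducible
    rng.shuffle(ids)
    n_train = round(train_fraction * len(ids))
    return ids[:n_train], ids[n_train:]


# Placeholder IDs standing in for the internal scans (3172 + 1359 = 4531).
train_ids, test_ids = split_scans(range(4531))
print(len(train_ids), len(test_ids))
```

With a pool of 4531 scans, rounding 70% gives the reported 3172 training/validation scans and leaves 1359 for internal testing.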

Segmentation performance

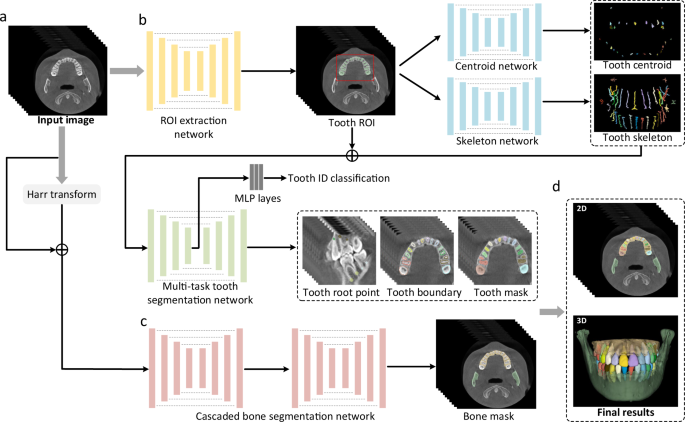

An overview of our AI system for tooth and alveolar bone segmentation is illustrated in Fig. 2. Given an input CBCT volume, the framework applies two concurrent branches for tooth and alveolar bone segmentation, respectively (see details provided in the “Methods” section). The segmentation accuracy is comprehensively evaluated in terms of three commonly used metrics: Dice score, sensitivity, and average surface distance (ASD) error. Specifically, Dice measures the spatial overlap between the segmentation result \(R\) and the ground-truth result \(G\), defined as \(\mathrm{Dice} = \frac{2\left|R\cap G\right|}{\left|R\right|+\left|G\right|}\). The sensitivity represents the ratio of the true positives to true positives plus false negatives. The distance metric ASD refers to the average distance between the surface of the segmentation result \(R\) and the surface of the ground-truth result \(G\).
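The three metrics can be illustrated with a small, dependency-free sketch. It is not the evaluation code used in the study: masks are represented as sets of integer voxel coordinates, and the helper names (`dice`, `sensitivity`, `surface`, `asd`) are hypothetical. The ASD shown here is one common symmetric variant, with surface voxels defined by 6-connectivity, which is an assumption since the text does not spell out the exact definition.

```python
from itertools import product
from math import dist

# 6-connected neighbourhood used to decide whether a voxel lies on the surface.
OFFSETS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def dice(result, truth):
    """Dice = 2|R ∩ G| / (|R| + |G|) for two sets of voxel coordinates."""
    if not result and not truth:
        return 1.0
    return 2 * len(result & truth) / (len(result) + len(truth))


def sensitivity(result, truth):
    """TP / (TP + FN); TP + FN equals the number of ground-truth voxels."""
    if not truth:
        raise ValueError("ground truth is empty")
    return len(result & truth) / len(truth)


def surface(mask):
    """Voxels of the mask that have at least one 6-neighbour outside it."""
    return {
        v for v in mask
        if any((v[0] + dx, v[1] + dy, v[2] + dz) not in mask for dx, dy, dz in OFFSETS)
    }


def asd(result, truth, spacing=(1.0, 1.0, 1.0)):
    """Symmetric average surface distance (assumed definition), in spacing units."""
    pr = [tuple(c * s for c, s in zip(v, spacing)) for v in surface(result)]
    pt = [tuple(c * s for c, s in zip(v, spacing)) for v in surface(truth)]
    if not pr or not pt:
        raise ValueError("both masks must be non-empty")
    # Brute-force nearest-surface search; fine for a toy example only.
    d = [min(dist(p, q) for q in pt) for p in pr]
    d += [min(dist(q, p) for p in pr) for q in pt]
    return sum(d) / len(d)


# Toy example: a 4x4x4 cube and the same cube shifted by one voxel along x.
truth = set(product(range(4), repeat=3))
result = {(x + 1, y, z) for x, y, z in truth}
print(dice(result, truth), sensitivity(result, truth), asd(result, truth))
```

The brute-force nearest-neighbour search keeps the sketch short; on full CBCT volumes a distance transform or spatial index would be the practical choice.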

a The input of the system is a 3D CBCT scan. b The morphology-guided network is designed to segment individual teeth. c The cascaded network is used to extract alveolar bones. d The outputs of the model include the masks of individual teeth and alveolar bones.

Table 2 lists the segmentation accuracy (in terms of Dice, sensitivity, and ASD) for each tooth and alveolar bone calculated on both the internal testing set (1359 CBCT scans from 3 known/seen centers) and the external testing set (407 CBCT scans from 12 unseen centers). On the internal testing set, our AI system achieves an average Dice score of 94.1%, an average sensitivity of 93.9%, and an average ASD error of 0.17 mm in segmenting individual teeth. The accuracy across different teeth is consistently high, although the performance on the 3rd molars (i.e., the wisdom teeth) is slightly lower than on other teeth. This is reasonable, as many patients do not have 3rd molars. Also, the 3rd molars usually have significant shape variations, especially in the root area. The accuracy of our AI system for segmenting alveolar bones is also promising, with an average Dice score of 94.5% and an ASD error of 0.33 mm on the internal testing set.

Results on the external testing set provide additional information to validate the generalization ability of our AI system on unseen centers or different cohorts. Specifically, from Table 2 we find that our AI system achieves an average Dice of 92.54% (tooth) and 93.8% (bone), sensitivity of 92.1% (tooth) and 93.5% (bone), and ASD error of 0.21 mm (tooth) and 0.40 mm (bone) on the external dataset. The performance on the external set is thus only slightly lower than that on the internal testing set, suggesting high robustness and generalization capacity of our AI system in handling heterogeneous distributions of patient data. This is extremely important for an application developed for different institutions and clinical centers in real-world clinical practice.

As a qualitative evaluation, we show representative segmentations produced by our AI system on both internal and external testing sets in Fig. 1c, where the individual teeth and surrounding bones are marked with different colors. We find that, although the image styles and data distributions vary greatly across different centers and manufacturers, our AI system can still robustly segment individual teeth and bones to reconstruct accurate 3D models.

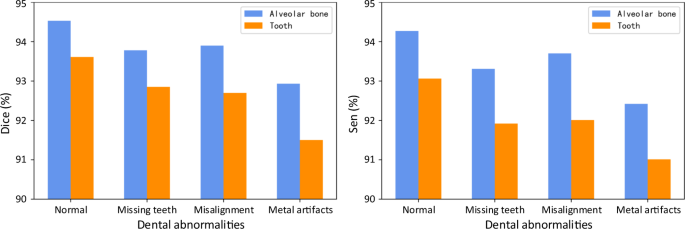

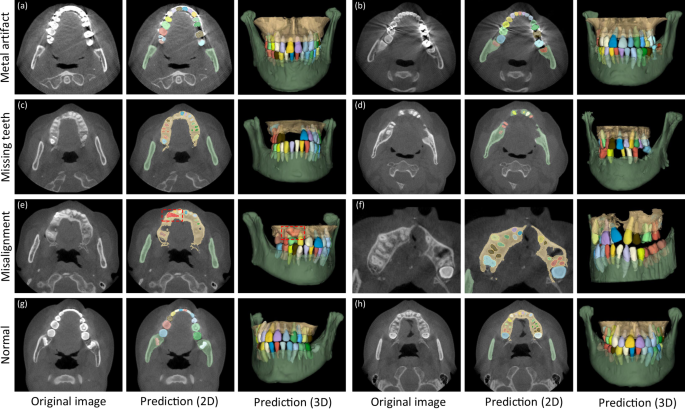

In clinical practice, patients seeking dental treatments usually suffer from various dental problems, e.g., missing teeth, misalignment, and metal implants. Accurate and robust segmentation of CBCT images for these patients is essential in the workflow of digital dentistry. Figure 3 compares the segmentation results (in terms of Dice score and sensitivity) produced by our AI system on healthy subjects and on patients with three different dental problems. Taking the results on the healthy subjects as the baseline, we observe that our AI system achieves comparable performance for the patients with missing and misaligned teeth, and slightly reduced performance for the patients with metal implants (i.e., for the CBCT images with metal artifacts). Also, in Fig. 4, we visualize both tooth and bone segmentation results on representative CBCT images with dental abnormalities (Fig. 4a–f) and normal CBCT images (Fig. 4g, h). Although metal artifacts introduced by dental fillings, implants, or metal crowns greatly change the image intensity distribution (Fig. 4a, b), our AI system can still robustly segment individual teeth and bones even with very blurry boundaries. In addition, the example segmentation results for the CBCT images with missing teeth (Fig. 4c, d) and/or misalignment problems (Fig. 4e, f) show that our AI system still achieves promising results, even for the extreme case with an impacted tooth, as highlighted by the red box in Fig. 4e.

Segmentation performance of the CBCT scans with different dental abnormalities, including the Dice and the sensitivity.

Images with metal artifacts (a, b), missing teeth (c, d) and misalignment problems (e, f), and without dental abnormality (g, h).

Ablation study

To validate the effectiveness of each important component in our AI system, including the skeleton representation and multi-task learning scheme for tooth segmentation, and the Haar filter transform for bone segmentation, we conducted a set of ablation studies, shown in Supplementary Table 2 in the Supplementary Materials. First, for the tooth segmentation task, we train three competing models, i.e., (1) our AI system (AI), (2) our AI system without skeleton information (AI (w/o S)), and (3) our AI system without the multi-task learning scheme (AI (w/o M)). AI (w/o S) and AI (w/o M) show lower performance in terms of all metrics (e.g., Dice scores lower by 2.3% and 1.4% on the internal set, and by 1.4% and 1.1% on the external set), demonstrating the effectiveness of the hierarchical morphological representation for accurate tooth segmentation. Moreover, the multi-task learning scheme with boundary prediction can greatly reduce the ASD error, especially on the CBCT images with blurry boundaries (e.g., with metal artifacts). Next, for the alveolar bone segmentation task, we compare our AI system with the model without Haar filter enhancement (AI (w/o H)). Our AI system increases the Dice score by 2.7% on the internal testing set and by 2.6% on the external testing set. The improvements are significant, indicating that enhancing the intensity contrast between alveolar bones and soft tissues allows the bone segmentation network to learn more accurate boundaries.

Comparison with other methods

To show the advantage of our AI system, we conduct three experiments to directly compare it with several of the most representative deep-learning-based tooth segmentation methods, including ToothNet [24], MWTNet [27], and CGDNet [28]. ToothNet is the first deep-learning-based method for tooth annotation in an instance-segmentation fashion; it first localizes each tooth by a 3D bounding box and then performs fine-grained delineation. MWTNet is a semantic-based method for tooth instance segmentation that identifies boundaries between different teeth. CGDNet detects each tooth’s center point to guide its delineation and reports state-of-the-art segmentation accuracy. Notably, all three competing methods are designed solely for tooth segmentation, as there is no study in the literature so far on joint automatic alveolar bone and tooth instance segmentation.

Considering that these competing methods are trained and evaluated with very limited data in their original papers, we conduct three new experiments under three different scenarios for a comprehensive comparison with our method. Specifically, we train these competing models using (1) a small-sized training set (100 CBCT scans), (2) a small-sized training set with data augmentation techniques (100+ CBCT scans), and (3) a large-scale training set with 3172 CBCT scans. The corresponding segmentation results on the external dataset are provided in Supplementary Table 3 in the Supplementary Materials. From Supplementary Table 3, we can make two important observations. First, our AI system consistently outperforms these competing methods in all three experiments, especially when using the small training set (i.e., 100 scans). These results show the advantage of the strategies we proposed. For example, instead of simply localizing each tooth by points or bounding boxes as in these competing methods, our AI system learns a hierarchical morphological representation (e.g., tooth skeleton, tooth boundary, and root apices) for individual teeth, which often have varying shapes, and thus can more effectively characterize each tooth, even with blurry boundaries, using a small training dataset. Second, for all methods (including our AI system), the data augmentation techniques (100+) consistently improve the segmentation accuracy. However, this improvement is small compared with that obtained from the large-scale real clinical data (3172 CBCT scans). This further demonstrates the importance of collecting a large-scale dataset in clinical practice.

In summary, compared to the previous deep-learning-based tooth segmentation methods, our AI system has three advantages. First, our AI system is fully automatic, while most existing methods need human intervention (e.g., having to manually delineate the foreground dental ROI) before tooth segmentation. Second, our AI system has the best tooth segmentation accuracy because of our proposed hierarchical morphological representation. Third, to the best of our knowledge, our AI system is the first deep-learning work for joint tooth and alveolar bone segmentation from CBCT images.

Comparison with expert radiologists

To verify the clinical applicability of our AI system for fully automatic tooth and alveolar bone segmentation, we compare its performance with that of expert radiologists on 100 CBCT scans randomly selected from the external set. We enrolled two expert radiologists with more than 5 years of professional experience. Note that these two expert radiologists did not take part in the ground-truth label annotation.

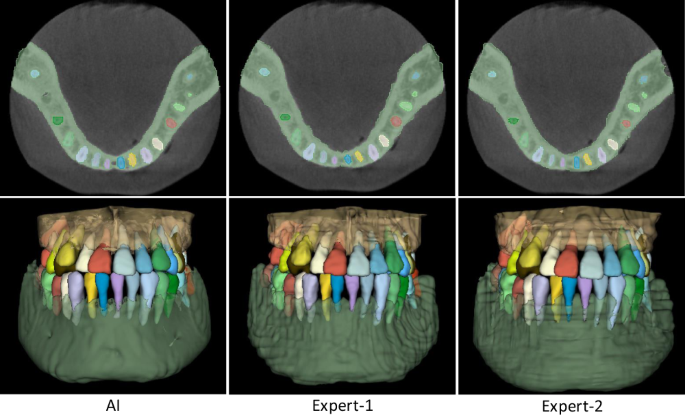

The comparison results are summarized in Table 3. In terms of segmentation accuracy (e.g., Dice score), our AI system performs slightly better than both expert radiologists, with average Dice improvements of 0.55% (expert-1) and 0.28% (expert-2) for delineating teeth, and 0.62% (expert-1) and 0.30% (expert-2) for delineating alveolar bones. Accordingly, we also compute the corresponding p values to validate whether the improvements are statistically significant. The significance level is set to 0.05. Specifically, for tooth segmentation, the paired p values are 2e−5 (expert-1) and 7e−3 (expert-2), and for alveolar bone segmentation, the paired p values are 1e−3 (expert-1) and 9e−3 (expert-2). All p values are smaller than 0.05, indicating that the improvements over manual annotation are statistically significant. It is also worth mentioning that the expert radiologists obtained a lower accuracy in delineating teeth than alveolar bones (i.e., lower by 0.79% for expert-1 and 0.84% for expert-2 in terms of Dice score). This is because teeth are relatively small objects, and neighboring teeth usually have blurry boundaries, especially at the interface between upper and lower teeth under a normal bite condition. Also, due to the above challenge, the segmentation efficiency of expert radiologists is significantly worse than that of our AI system. Table 3 shows that the two expert radiologists take 147 and 169 min (on average) to annotate one CBCT scan, respectively. In contrast, our AI system completes the entire delineation process of one subject within seconds (i.e., 17 s). Besides quantitative evaluations, we also show qualitative comparisons in Fig. 5 to check the visual agreement between the segmentation results produced by our AI system and by the expert radiologists. The 3D dental models reconstructed by our AI system have much smoother surfaces than those annotated manually by expert radiologists. These results further highlight the advantage of conducting segmentation in 3D space (i.e., by our AI system) rather than through 2D slice-by-slice operations (i.e., by expert radiologists).

Qualitative segmentation results produced by our AI system and two expert radiologists.

Clinical improvements

Besides direct comparisons with experts in terms of both segmentation accuracy and efficiency, we also validate the clinical utility of our AI system, i.e., whether this AI system can assist dentists and facilitate clinical workflows of digital dentistry. To this end, we estimate the segmentation time spent by the two expert radiologists when assisted by our AI system. Specifically, instead of performing fully manual segmentation, the expert radiologists first apply our trained AI system to produce an initial segmentation. Then, they check the initial results slice-by-slice and perform manual corrections when necessary, i.e., when the outputs from our AI system are problematic according to their clinical experience. Therefore, the overall work time includes the time spent verifying and updating the segmentation results from our AI system.

The corresponding results are summarized in Table 3. Without assistance from our AI system, the two expert radiologists spend about 150 min on average to manually delineate one subject. In contrast, with the assistance of our AI system, the annotation time is dramatically reduced to less than 5 min on average, which is a ~96.7% reduction in segmentation time. A paired t-test shows statistically significant improvements, with \(P_1 = 3.4 \times 10^{-13}\) and \(P_2 = 5.4 \times 10^{-15}\) for the two expert radiologists, respectively. Also, it is worth noting that the expert radiologists accepted most of the fully automatic prediction results produced by our AI system without any modification; only 12 out of the 100 CBCT scans required extra human intervention. For example, the predicted tooth roots may be slightly over- or under-segmented. Additional refinements can make the dental diagnosis or treatments more reliable. Our clinical partners have confirmed that such performance is fully acceptable for many clinical and industrial applications, e.g., doctor-patient communications and treatment planning for orthodontics or dental implants, indicating the high clinical utility of our AI system.
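The time-saving arithmetic and the paired t-test mentioned above can be sketched with the Python standard library. This is an illustrative sketch only: the helper names and the per-scan timings are hypothetical (they are not the study's measurements), and the sketch computes only the t statistic. Turning it into a p value requires the t distribution with n − 1 degrees of freedom, for example via `scipy.stats.ttest_rel`.

```python
from math import sqrt
from statistics import mean, stdev


def relative_reduction(before, after):
    """Fractional reduction when going from `before` to `after`."""
    return (before - after) / before


def paired_t_statistic(before, after):
    """t statistic of a paired t-test on matched measurements (e.g., per-scan times)."""
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two equally sized samples with at least 2 pairs")
    diffs = [b - a for b, a in zip(before, after)]
    sd = stdev(diffs)              # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / (sd / sqrt(len(diffs)))


# Reported averages: about 150 min manually vs. under 5 min with AI assistance.
print(round(relative_reduction(150, 5) * 100, 1))  # 96.7

# Hypothetical per-scan annotation times in minutes, for illustration only.
manual_minutes = [152.0, 147.5, 160.0, 139.0, 155.5]
assisted_minutes = [4.5, 5.0, 6.0, 3.5, 4.0]
print(paired_t_statistic(manual_minutes, assisted_minutes))
```

A paired test is the natural choice here because each scan is annotated under both conditions, so the per-scan differences, rather than the two groups of times, carry the comparison.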