In orthodontics, research on deep learning algorithms is increasingly being conducted. Well-known and promising topics include automated cephalometric landmark identification3,14,15, classification or diagnosis for treatment planning6,7,8,16,17, and tooth segmentation and setup using three-dimensional digital tools such as cone-beam computed tomography (CBCT) and scan data18,19.

In particular, DCNN algorithms, which have demonstrated robustness in medical image analysis, can support reliable clinical decision-making and accurate diagnosis. Hence, in this study, a new DCNN-based AI model was developed and evaluated for sagittal skeletal classification using lateral cephalometric images. As part of pre-processing, extracting image regions that include the A-, B-, and N-points effectively aided model training. When sampling the cephalometric images, all images with good resolution were included, regardless of dental prostheses, implants, patient age, or even a history of cleft lip and palate. This diversity of images may be associated with the higher performance of the current DCNN model compared with the DCNN models of earlier studies and with the AI software6,8. Appropriate network depth may be another factor contributing to the better performance observed in this study4.
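The exact cropping procedure is not described here, so the following is only a hypothetical sketch of one way to extract a region containing the A-, B-, and N-points. It assumes that landmark pixel coordinates are already available for each training image and that a fixed pixel margin is acceptable; the function name `crop_landmark_roi` and the `margin` value are illustrative choices, not the study's method.

```python
import numpy as np


def crop_landmark_roi(image, landmarks, margin=32):
    """Hypothetical ROI crop: the smallest axis-aligned box containing all
    landmarks, padded by `margin` pixels and clipped to the image bounds.

    image: 2D array (H, W), e.g. a grayscale lateral cephalogram.
    landmarks: dict mapping names (e.g. "A", "B", "N") to (x, y) pixel coords.
    Returns the cropped array and the box (x0, y0, x1, y1), with x1/y1 exclusive.
    """
    pts = np.array(list(landmarks.values()), dtype=float)
    h, w = image.shape[:2]
    # Expand the landmark bounding box by the margin, then clip to the image.
    x0 = max(int(np.floor(pts[:, 0].min())) - margin, 0)
    y0 = max(int(np.floor(pts[:, 1].min())) - margin, 0)
    x1 = min(int(np.ceil(pts[:, 0].max())) + margin + 1, w)
    y1 = min(int(np.ceil(pts[:, 1].max())) + margin + 1, h)
    return image[y0:y1, x0:x1], (x0, y0, x1, y1)


# Illustrative coordinates only (not measured data).
image = np.zeros((2000, 1600))
roi, box = crop_landmark_roi(
    image, {"N": (1200, 400), "A": (1250, 900), "B": (1230, 1300)}, margin=50
)
print(box, roi.shape)
```

Cropping to a landmark-bounded box keeps the anatomy relevant to sagittal jaw relationships while discarding most of the cranium; in practice the crop would then be resized to the network's fixed input size.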

Class activation mapping (CAM) is useful for visualizing discriminative image regions and thus for assessing the region of interest (ROI) used by DCNN models such as the current one20. In this study, the A- and B-points were commonly highlighted in successfully classified images, although the N-point was not indicated by the CAM.

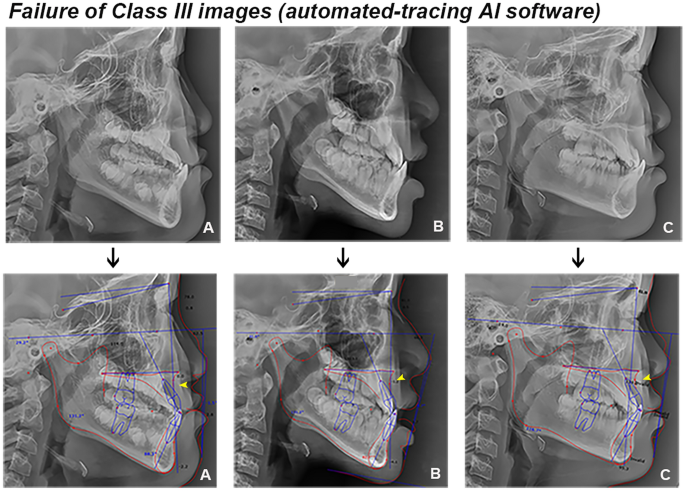
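To make the CAM idea concrete, the sketch below follows the original CAM formulation, which assumes that the last convolutional layer is followed by global average pooling and a single linear classification layer; the map for class $c$ is then $M_c(x, y) = \sum_k w_k^c f_k(x, y)$. The architecture of the current model is not specified here, so the array shapes, the function name `class_activation_map`, and the nearest-neighbour upsampling are assumptions for illustration.

```python
import numpy as np


def class_activation_map(features, fc_weights, class_idx, upscale=1):
    """CAM as the class-weighted sum of the final conv feature maps.

    features: array (K, h, w) of final conv feature maps for one image.
    fc_weights: array (C, K) of the linear layer that follows global
        average pooling (assumed architecture).
    Returns an (h * upscale, w * upscale) map rescaled to [0, 1].
    """
    # M_c(x, y) = sum_k w_k^c * f_k(x, y): contract over the channel axis K.
    cam = np.tensordot(fc_weights[class_idx], features, axes=(0, 0))
    # Min-max normalisation so the map can be displayed as a heat map.
    cam = cam - cam.min()
    if cam.max() > 0:
        cam = cam / cam.max()
    # Nearest-neighbour upsampling toward the input resolution for overlay.
    return np.repeat(np.repeat(cam, upscale, axis=0), upscale, axis=1)


# Toy example: two channels, two classes, identity classifier weights.
features = np.array([[[1.0, 0.0], [0.0, 0.0]],
                     [[0.0, 0.0], [0.0, 1.0]]])
cam = class_activation_map(features, np.eye(2), class_idx=0, upscale=2)
print(cam)
```

Overlaying such a map on the cropped cephalogram is what allows one to check whether high-activation areas coincide with the A- and B-point regions.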

Meanwhile, regarding automated landmark detection, detection success rates have improved in previous research21,22. Recently, Lee et al.23 reported a mean landmark error of 1.5 mm and a successful detection rate of 82% within a 2 mm range, and Hwang et al.13 reported detection errors of < 0.9 mm compared with human results, indicating that automated detection was clinically acceptable. Despite these gradual improvements in the detection accuracy of AI, pinpointing a particular landmark is not straightforward, even for an experienced orthodontist. In particular, the A- and B-points used in this study are well known to be error-prone. In a previous study on automated landmark identification, the detection errors for the A-point (2.2 mm) and the B-point (3.3 mm) were higher than the mean of 1.5 mm across all landmarks13. Yu et al.2 also noted the difficulty of identifying the A-point in AI-based cephalometric analysis. In this study, the automated-tracing AI software often misidentified these two points, which likely contributed to its lower performance compared with the DCNN model. Notably, the sensitivity of the AI software (its true positive rate, i.e., its ability to correctly identify the skeletal classification) was far lower for Class III images than for the other classes (Fig. 4 and Table 4). As shown in Fig. 6, the thicker lip soft tissue around the A-point in Class III patients likely made identification of this landmark less accurate24,25, an effect that may be even more pronounced in patients with cleft lip and palate26. In this regard, the DCNN model, which uses a larger ROI, may perform better in skeletal classification than AI software that relies on pinpointing individual landmarks.

Examples of Class III images traced by the automated-tracing AI software (arrows indicate erroneous detection of the A-point).
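The per-class sensitivity discussed above is a one-vs-rest recall: for class $c$, $\text{sensitivity}_c = TP_c / (TP_c + FN_c)$, the fraction of images truly belonging to class $c$ that are also predicted as $c$. The short sketch below computes it from label lists; the labels in the example are made-up toy values for illustration, not the counts behind Fig. 4 or Table 4, and the helper name `per_class_sensitivity` is hypothetical.

```python
import numpy as np


def per_class_sensitivity(y_true, y_pred, labels):
    """One-vs-rest sensitivity (true positive rate) for each class.

    sensitivity_c = TP_c / (TP_c + FN_c): the fraction of samples whose
    true class is c that were also predicted as c.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    result = {}
    for c in labels:
        is_c = y_true == c
        tp = np.sum(is_c & (y_pred == c))   # class c, predicted as c
        fn = np.sum(is_c & (y_pred != c))   # class c, predicted as another class
        result[c] = float(tp / (tp + fn)) if (tp + fn) > 0 else float("nan")
    return result


# Toy labels only: two Class III cases are misclassified as Class I.
y_true = ["I", "I", "II", "II", "III", "III", "III", "III"]
y_pred = ["I", "I", "II", "I", "III", "I", "I", "III"]
print(per_class_sensitivity(y_true, y_pred, ["I", "II", "III"]))
```

Because sensitivity only looks at the samples of the true class, a model can achieve acceptable overall accuracy while still missing a large share of one class, which is why the class-wise view is informative here.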

Although a straightforward comparison of these two AI models is challenging, investigating their performance is worthwhile for precise diagnosis and decision-making. The newly developed image-based DCNN algorithm enables clinicians to obtain accurate diagnoses directly from images and to predict treatment outcomes. Thus, it can provide valuable input for decision-making and treatment planning without the time-consuming process of cephalometric landmarking and analysis. Nonetheless, precise landmark-based cephalometric analysis remains critical for determining the degree of skeletal and dental discrepancy and for obtaining other informative measurements. In particular, some of these variables may carry greater weight in the orthodontist’s treatment-planning decisions.

Although the current study successfully developed the DCNN-based AI model and compared the two AI models for skeletal classification, it has a limitation in that the learning data available for the two respective AI algorithms were heterogeneous. In addition, as reported in a previous study27, combining multiple measurements or variables yields better sagittal skeletal classification than using the ANB angle alone. Therefore, for patients with sagittal, transverse, and/or vertical problems, orthodontic analysis should integrate multi-source data, such as facial and intraoral scan data, CBCT images, and demographic information, with more advanced algorithmic models.
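For reference, the single-angle approach mentioned above can be written as $\text{ANB} = \text{SNA} - \text{SNB}$, where SNA and SNB are the angles at N between the N→S line and the N→A and N→B lines, respectively. The sketch below computes ANB from 2D landmark coordinates and applies a threshold rule. The cut-offs (Class II above 4°, Class III below 0°) are an assumed, commonly used convention that varies between studies; they are not the classification criteria of this study, and all function names are hypothetical.

```python
import numpy as np


def angle_deg(vertex, p1, p2):
    """Unsigned angle p1-vertex-p2 in degrees."""
    v1 = np.asarray(p1, dtype=float) - np.asarray(vertex, dtype=float)
    v2 = np.asarray(p2, dtype=float) - np.asarray(vertex, dtype=float)
    cos = np.dot(v1, v2) / (np.linalg.norm(v1) * np.linalg.norm(v2))
    # Clip guards against tiny floating-point overshoot outside [-1, 1].
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def anb_angle(S, N, A, B):
    """ANB = SNA - SNB; positive when the A-point lies anterior to the B-point."""
    return angle_deg(N, S, A) - angle_deg(N, S, B)


def classify_by_anb(anb, lower=0.0, upper=4.0):
    """Hypothetical single-variable rule; the cut-offs are an assumed convention."""
    if anb > upper:
        return "Class II"
    if anb < lower:
        return "Class III"
    return "Class I"


# Synthetic geometry: N at the origin, S directly posterior; A and B placed
# so that SNA = 82 degrees and SNB = 79 degrees (image y-axis points down).
S, N = (-70.0, 0.0), (0.0, 0.0)
A = (-50 * np.cos(np.radians(82)), 50 * np.sin(np.radians(82)))
B = (-90 * np.cos(np.radians(79)), 90 * np.sin(np.radians(79)))
anb = anb_angle(S, N, A, B)
print(round(anb, 2), classify_by_anb(anb))
```

A rule of this kind depends on a single measurement and on the accuracy of three landmarks, which is the baseline that the multi-measurement approaches cited above improve upon.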

It would be interesting to investigate the performance of the DCNN model in predicting facial growth using cervical vertebral maturation and/or hand-wrist radiographs, and to further evaluate the relationship between these predictions.