Articles identified from the search

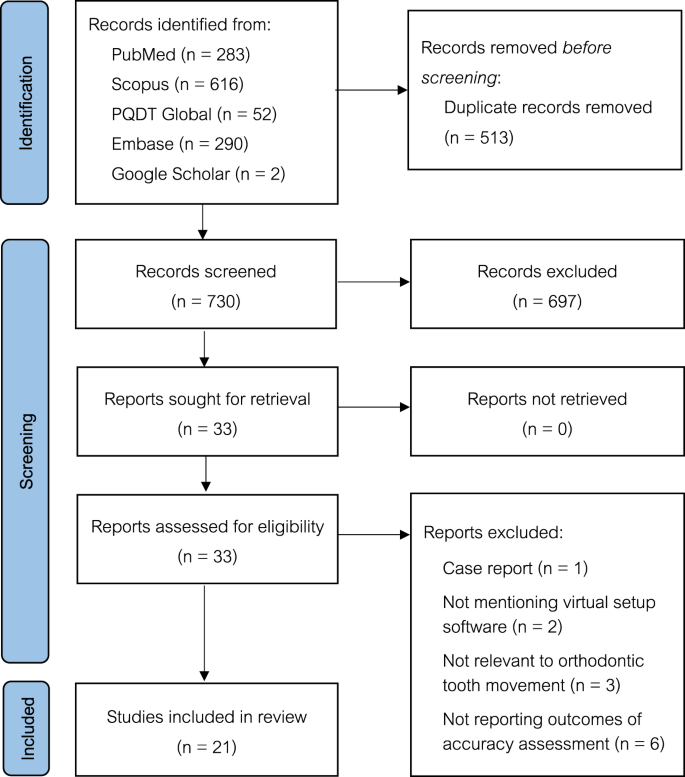

As presented in the PRISMA flow chart for study selection (Fig. 1), the electronic searches identified 1241 articles from the four databases (PubMed = 283, Scopus = 616, Embase = 290, and PQDT Global = 52), and two further studies were identified from Google Scholar. No additional research was identified from the reference lists of the included articles. After 513 duplicates were removed, 730 titles and abstracts were screened against the inclusion and exclusion criteria. Following the initial screen, 33 articles were selected for full-text review, and 12 of them were excluded for one of the following reasons: being a case report, not mentioning virtual setup software, not comparing virtual setup with other techniques, not being relevant to orthodontic tooth movement, or not reporting outcomes of accuracy assessment. Consequently, 21 full-text articles were included in this systematic review.

Fig. 1 PRISMA 2020 flow diagram of the article selection process.
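The counts in the selection process can be checked with simple arithmetic. The following is a minimal sketch in Python that uses only the figures reported in the text; the variable names are illustrative and not part of the review.

```python
# Record counts as reported in the text for the PRISMA flow.
database_hits = {"PubMed": 283, "Scopus": 616, "Embase": 290, "PQDT Global": 52}
google_scholar_hits = 2
duplicates_removed = 513
full_text_reviewed = 33
full_text_excluded = 12

# Identification: database records plus the Google Scholar records.
database_total = sum(database_hits.values())
identified = database_total + google_scholar_hits

# Screening: records left after duplicate removal.
screened = identified - duplicates_removed

# Inclusion: full texts reviewed minus full texts excluded.
included = full_text_reviewed - full_text_excluded

print(database_total, identified, screened, included)  # 1241 1243 730 21
```

The totals agree with the reported numbers: 1241 database records, 730 records screened, and 21 articles included.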

Quality of the articles included in this review

When the strength of the included evidence was evaluated with SBU, three articles reporting prospective clinical trials were considered to provide a high value of evidence (Grade A) [34,35,36]. The other included articles appeared to be of moderate value of evidence, as they were retrospective studies. Therefore, the overall level of evidence of this systematic review was considered strong, with three articles of Level ‘A’ evidence and the other studies of Level ‘B’. According to the ROBINS-I assessment, every included article was rated as low or moderate risk of bias in all domains, so all of them were interpreted as having a moderate risk of bias. Although no included research was considered high quality (low risk of bias), the quality of the included evidence was not considered problematic, as nearly all of the articles were non-randomized studies. The risks of bias therefore arose mostly from confounding factors in the research designs, such as different setup providers [35, 37, 38], no mention of a setup provider [35, 39,40,41,42], varying degrees of malocclusion at the beginning of treatment [39, 42,43,44,45], and the presence of additional mechanics [44,45,46,47]. In addition, the researchers who assessed the outcomes were not blinded in several articles [25, 34,35,36,37,38, 40,41,42,43,44,45, 47,48,49,50,51,52]. Test-retest reliability was not assessed to confirm the reproducibility and reliability of the measurements in five articles [25, 34, 40, 41, 45]. Only one article assessed interrater reliability to confirm the consistency between the two assessors [44].

Study design of included articles

Most of the included studies were non-randomized retrospective studies (n = 17), with the exception of one prospective randomized clinical trial [34], two prospective non-randomized clinical studies [35, 36], and one retrospective randomized study [39]. The sample sizes of the included articles varied from 10 to 94, and the samples presented various types of orthodontic problems, ranging from mild to severe malocclusion. Of the 21 studies included in this systematic review, three compared the treatment outcomes of manual and virtual setups [37, 39, 48]. The outcomes of virtual setup and actual treatment were compared in 18 articles: the accuracy of virtual setup in clear aligner treatment was evaluated in ten studies [25, 34,35,36, 38, 40, 41, 43, 49, 50], while eight studies evaluated its accuracy in fixed orthodontic appliance treatment [42, 44,45,46,47, 51,52,53].

Virtual setup software used in included articles

Several software programs were used for virtual setup in the included articles. ClinCheck appeared to be the most popular, being used in six articles [25, 34, 35, 40, 43, 49], followed by OrthoAnalyzer in five studies [37,38,39, 44, 47], SureSmile in three papers [42, 45, 53], and Maestro 3D in two [50, 51]. The other tooth movement simulation programs were 3Txer [48], Airnivol [36], Flash [25], OrthoDS 4.6 [41], eXceed [52], and uLab [46], each of which was used in only one article. In addition, four studies integrated cone-beam computed tomography (CBCT) data into the tooth simulation software in order to provide more precise information with reference to the face and skull of the patients [44, 47, 49, 51].

Outcome measurements

To measure the accuracy of tooth movement simulation, the treatment outcomes of virtual setup were compared with those of manual setup or actual treatment, and the differences between the two approaches were assessed in terms of linear intra-arch and interarch dimensions and angular dimensions. The comparisons were performed by digital software measurement [36,37,38, 40, 46, 50, 53], manual measurement [39, 48], or superimposition with a best-fit method [25, 34, 35, 41,42,43,44,45, 47, 49, 51, 52]. Seven included studies clearly defined threshold values for tooth movement discrepancies between virtual setup and actual treatment with reference to the American Board of Orthodontics (ABO) model grading system [25, 34, 42, 43, 45, 47, 52]. In these articles, clinically significant discrepancies were set at over 0.5 mm for linear movements and over 2 degrees for angular movements. However, Smith et al. [53] set a discrepancy of 2.5 degrees as the clinically acceptable variation for tip and torque.
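The ABO-based thresholds can be illustrated with a short sketch. The Python function below is hypothetical and is not taken from any of the included studies; it only applies the stated cut-offs (over 0.5 mm for linear and over 2 degrees for angular movements) to a predicted and an achieved value, and the example measurements are invented for illustration.

```python
# Thresholds as stated in the text for the seven ABO-referenced studies.
LINEAR_THRESHOLD_MM = 0.5
ANGULAR_THRESHOLD_DEG = 2.0


def is_clinically_significant(predicted, achieved, kind):
    """Return True if the absolute discrepancy exceeds the threshold.

    kind is "linear" (values in mm) or "angular" (values in degrees).
    This helper is an illustrative assumption, not a published method.
    """
    thresholds = {
        "linear": LINEAR_THRESHOLD_MM,
        "angular": ANGULAR_THRESHOLD_DEG,
    }
    if kind not in thresholds:
        raise ValueError(f"unknown movement kind: {kind!r}")
    return abs(predicted - achieved) > thresholds[kind]


# Hypothetical measurements, for illustration only.
linear_flag = is_clinically_significant(1.2, 0.5, "linear")     # 0.7 mm apart
angular_flag = is_clinically_significant(10.0, 8.5, "angular")  # 1.5 degrees apart
print(linear_flag, angular_flag)  # True False
```

Under the criterion of Smith et al. [53], the angular threshold for tip and torque would be 2.5 degrees instead of 2 degrees.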

Accuracy of virtual setup

The accuracy of tooth movement simulations can be categorized into three groups, depending on the interventions that virtual setup was compared with, which were: (1) the accuracy of virtual setup in simulating treatment outcomes compared with manual setup, (2) the accuracy of virtual setup in simulating treatment outcomes of clear aligner treatment, and (3) the accuracy of virtual setup in simulating treatment outcomes of fixed appliance treatment.

The accuracy of virtual setup in simulating treatment outcomes compared with manual setup

Three articles compared treatment outcomes between virtual and manual setups [37, 39, 48]. Two articles supported the accuracy of tooth movement simulation using OrthoAnalyzer and 3Txer software [37, 48], as virtual and manual setups provided comparable measurements of treatment outcomes. However, one article reported statistically significant differences in tooth movement simulation between the virtual and conventional setups [39]: the printed virtual setup was less accurate than the conventional setup, with small accuracy differences attributed to printing technology, tooth collision, and software limitations. The data extracted from the included articles in this group are presented in Table S1.

The accuracy of virtual setup in simulating treatment outcomes of clear aligner treatment

Ten articles compared treatment outcomes between virtual setup and clear aligner treatment [25, 34,35,36, 38, 40, 41, 43, 49, 50], and the patients included in all of these studies were non-extraction and non-surgical cases. ClinCheck was the most popular software used for clear aligner prediction [25, 34, 35, 40, 43, 49], and the other virtual setup programs were Flash [25], OrthoAnalyzer [38], OrthoDS 4.6 [41], Airnivol [36], and Maestro 3D [50]. There appeared to be discrepancies between the tooth movement simulations from these virtual setups and the actual treatment outcomes.

All included studies demonstrated statistically significant differences between predicted and achieved tooth positions [25, 34, 35, 40, 43, 49]. The accuracy seemed to be higher in linear dimensions than in angular dimensions [25, 34] and in the transverse direction than in the vertical and sagittal directions [35, 49]. The most precisely predictable tooth movement was tipping, especially in maxillary and mandibular anterior teeth, followed by torque and rotation [36, 38, 41, 50]. Sorour et al. [25] also compared ClinCheck and Flash and found no clinically or statistically significant differences in accuracy and efficacy between the Invisalign and Flash aligner systems. The data extracted from the included articles in this group are presented in Table S2.

The accuracy of virtual setup in simulating treatment outcomes of fixed appliance treatment

Eight articles compared treatment outcomes between virtual setups and fixed appliance treatment [42, 44,45,46,47, 51,52,53]. The tooth simulation software used in these articles included SureSmile [42, 45, 53], OrthoAnalyzer [44, 47], uLab [46], Maestro 3D [51], and eXceed [52]. The patients in these studies had more severe orthodontic problems than those in the clear aligner studies, as five articles considered extraction cases [42, 45, 46, 52, 53], while three articles evaluated orthodontic treatment combined with orthognathic surgery [44, 47, 51]. Only one article reported that an indirect bonding technique was used for orthodontic bracket placement [45].

The degree of accuracy varied depending on the software, tooth position, and type of tooth movement. SureSmile appeared to be more accurate in the mesiodistal and vertical directions than in buccolingual position, and there seemed to be clinically significant discrepancies in angular movements (tip and torque) of nearly all teeth [45, 53]. Its highest precision could be expected for translational and rotational movements of incisor teeth, with accuracy decreasing from the anterior to the posterior areas [42]. Research on OrthoAnalyzer demonstrated a degree of accuracy similar to that of SureSmile. Although there were statistically significant discrepancies in tooth movement, clinical significance was not found, suggesting its potential for treatment plan discussion [44]. However, it could be considered less accurate in more complicated cases, especially in rotational and translational directions [47]. Research on uLab [46], Maestro 3D [51], and eXceed [52] also showed statistically significant discrepancies in tooth movement simulation; however, these programs could be used for treatment planning and outcome visualization because the clinical discrepancies were acceptable. The data extracted from the included articles in this group are presented in Table S3.