Data set and image pre-processing

We collected transverse and longitudinal panoramic images of patients who visited the Daejeon Wonkwang University Dental Hospital. All panoramic images obtained between January 2020 and June 2021 were randomly selected. When multiple images were available for a patient, the earliest image was chosen. Images of unsuitable quality, as determined by the consensus of three oral and maxillofacial radiologists, were excluded.

A total of 9663 panoramic radiographs were selected (4774 males and 4889 females; mean age, 39 years 3 months). Panoramic images were obtained using three different panoramic machines: Promax® (Planmeca OY, Helsinki, Finland), PCH-2500® (Vatech, Hwaseong, Korea), and CS 8100 3D® (Carestream, Rochester, New York, USA). Images were exported in DICOM format.

Patient ages ranged from 3 years 4 months to 79 years 1 month (Table 1). Because the number of images differed across age groups, a purely random split could leave the sets unbalanced; we therefore split the data within each age group at a 6:2:2 ratio into the training, validation, and test sets. This yielded 5861 training, 1916 validation, and 1886 test images.
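The per-age-group 6:2:2 split described above can be sketched as follows. This is a minimal illustration, not the authors' code: the `group_of` function is hypothetical (grouping by decade of age is only an assumption, since the actual bins of Table 1 are not reproduced here), and per-group rounding can make the totals deviate slightly from an exact 6:2:2 split.

```python
import random
from collections import defaultdict


def stratified_split(samples, group_of, ratios=(0.6, 0.2, 0.2), seed=0):
    """Split samples into train/val/test, applying the ratio within each group.

    group_of is a hypothetical function mapping a sample to its age group.
    """
    groups = defaultdict(list)
    for s in samples:
        groups[group_of(s)].append(s)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for key in sorted(groups):
        items = groups[key][:]
        rng.shuffle(items)  # random assignment inside each age group
        n = len(items)
        n_train = round(n * ratios[0])
        n_val = round(n * ratios[1])
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Example: ages in months, grouped by decade (an assumed binning).
ages = [40 + 7 * i for i in range(120)]
tr, va, te = stratified_split(ages, lambda months: months // 120)
```

Splitting inside each group, rather than over the pooled data, guarantees that every age group is represented in all three sets in roughly the same proportion.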

The image edges were cropped to focus on the meaningful region, and zero padding was added around the image. Additionally, because the images obtained from the different devices differed in size (2868 × 1504 pixels and 2828 × 1376 pixels), all images were resized to 384 × 384 pixels to enable batch learning and to speed up training.
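The crop, zero-pad, and resize steps can be sketched on a grayscale image stored as a list of rows. This is a hedged illustration under assumptions not stated in the text: the crop margins are hypothetical parameters, padding is done to a centered square so that resizing to 384 × 384 does not distort the aspect ratio, and nearest-neighbour interpolation stands in for whatever resampling method was actually used.

```python
def crop(img, top, bottom, left, right):
    """Remove the given number of pixels from each edge of a 2D image (list of rows)."""
    h, w = len(img), len(img[0])
    return [row[left:w - right] for row in img[top:h - bottom]]


def pad_to_square(img, value=0):
    """Center the image on a square canvas filled with `value` (zero padding)."""
    h, w = len(img), len(img[0])
    size = max(h, w)
    top, left = (size - h) // 2, (size - w) // 2
    out = [[value] * size for _ in range(size)]
    for i, row in enumerate(img):
        out[top + i][left:left + w] = row
    return out


def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize (an assumed interpolation method)."""
    h, w = len(img), len(img[0])
    return [[img[i * h // out_h][j * w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def preprocess(img, margins, size=384):
    """Crop by hypothetical (top, bottom, left, right) margins, pad, then resize."""
    return resize_nearest(pad_to_square(crop(img, *margins)), size, size)
```

In practice an imaging library would be used for speed; the list-based version only makes the order of operations explicit.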

To make more effective use of the acquired data, the training set was normalized and augmented with horizontal flipping (probability of 0.5) and color jitter.
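A minimal sketch of this training-time pipeline on an intensity image in [0, 1] is shown below. Only the flip probability of 0.5 comes from the text; the jitter ranges and the normalization mean and standard deviation are hypothetical defaults. In a PyTorch pipeline these steps would typically map to torchvision's `RandomHorizontalFlip(p=0.5)`, `ColorJitter`, and `Normalize` transforms.

```python
import random


def _clip(v):
    return min(1.0, max(0.0, v))


def augment(img, rng, p_flip=0.5, brightness=0.2, contrast=0.2, mean=0.5, std=0.5):
    """Augment one grayscale image given as a 2D list of intensities in [0, 1].

    brightness, contrast, mean, and std are hypothetical values.
    """
    # Horizontal flip with probability p_flip (0.5 in the text).
    if rng.random() < p_flip:
        img = [row[::-1] for row in img]
    # Color jitter: scale brightness, then stretch contrast about the image mean.
    b = rng.uniform(1.0 - brightness, 1.0 + brightness)
    img = [[_clip(v * b) for v in row] for row in img]
    c = rng.uniform(1.0 - contrast, 1.0 + contrast)
    m = sum(v for row in img for v in row) / sum(len(row) for row in img)
    img = [[_clip((v - m) * c + m) for v in row] for row in img]
    # Normalization to zero-centered values.
    return [[(v - mean) / std for v in row] for row in img]


rng = random.Random(0)
example = augment([[0.1, 0.4], [0.7, 0.9]], rng)
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible for a given seed.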

Architecture of deep-learning model

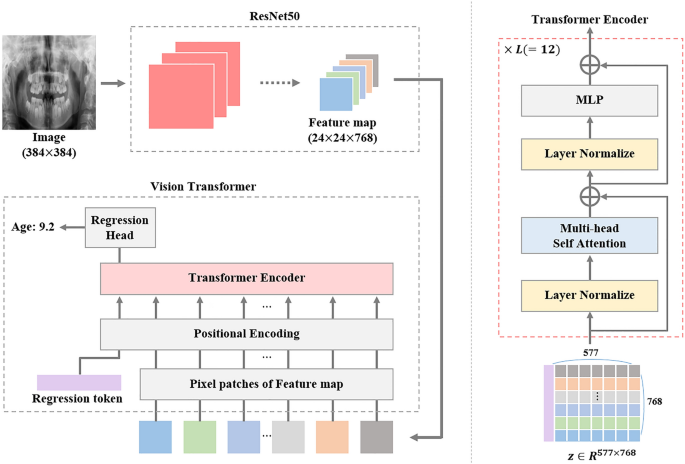

We used two types of age estimation models. The first is ResNet, a well-known CNN-based model that has been used as a feature extractor in many age prediction studies24,25. ResNet mitigates the vanishing-gradient problem through residual learning with skip connections, which allows very deep networks to be trained26. However, because convolution imposes a locality inductive bias, the model learns local features more readily than global information. The other is ViT23, which uses a transformer27 encoder and has a weaker inductive bias than CNN-based models. This structural limitation can be overcome by pre-training on large datasets such as ImageNet21k. ViT attends over a wide range of distances, so it can learn both global information and local features, and it has been reported to achieve better classification performance than CNN-based models. To combine the strengths of the two models, we propose an age prediction model based on the hybrid ResNet50-ViT23, which can effectively learn both the global information needed to understand the overall oral structure and the local features that distinguish fine differences in the teeth and periodontal region.
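Why skip connections help with vanishing gradients can be seen in a toy scalar model, which is only an illustration and not ResNet itself: stack `depth` layers that each multiply by a small weight `w`. Without skip connections the input gradient is the product of the layer derivatives, $w^{depth}$; with a skip connection each layer computes $y = x + wx$, so each factor becomes $1 + w$ and the gradient no longer collapses toward zero.

```python
def plain_gradient(w, depth):
    """d(output)/d(input) for `depth` stacked layers f(x) = w * x."""
    g = 1.0
    for _ in range(depth):
        g *= w  # each layer multiplies the gradient by w
    return g


def residual_gradient(w, depth):
    """Same stack, but each layer is y = x + w * x (identity skip connection)."""
    g = 1.0
    for _ in range(depth):
        g *= 1.0 + w  # the identity path contributes the extra 1
    return g


print(plain_gradient(0.01, 50))     # vanishingly small (about 1e-100)
print(residual_gradient(0.01, 50))  # about 1.64
```

Real residual blocks contain nonlinear convolutional branches, but the same additive identity path is what keeps gradients from shrinking multiplicatively with depth.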

The overall architecture of the proposed model is presented in Fig. 1. The feature map $\mathbf{x}\in\mathbb{R}^{H\times W\times C}$ extracted by feeding the panoramic image into ResNet50 was used as the input patches for the transformer, where $H$ and $W$ are the height and width of the feature map, respectively, and $C$ is the number of channels. Because each pixel of the feature map is treated as a separate patch, the total number of patches is $N = HW$.
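Treating each feature-map pixel as a patch amounts to flattening the $H\times W\times C$ map into a sequence of $N = HW$ vectors of length $C$, as in the sketch below (nested lists stand in for tensors). The overall downsampling factor of the ResNet50 backbone is not stated in the text; if it were 16, a hypothetical value used here only for the arithmetic, a 384 × 384 input would give a 24 × 24 map and $N = 576$.

```python
def flatten_to_patches(feature_map):
    """Turn an H x W x C feature map (nested lists) into N = H * W patches of length C.

    Row-major order: patch index i = row * W + col.
    """
    return [list(pixel) for row in feature_map for pixel in row]


def num_patches(input_size, stride):
    """N = H * W for a square input downsampled by `stride` (stride is an assumption)."""
    side = input_size // stride
    return side * side


print(num_patches(384, 16))  # 576 under the assumed stride of 16
```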

Fig. 1. Proposed architecture of the age estimation model.

To retain the positional information of the extracted feature map, we add a trainable positional encoding $\mathbf{x}_{pos}\in\mathbb{R}^{(N+1)\times C}$ to the sequence of feature patches: $\mathbf{z}=[x_{reg}; x_{1}; x_{2}; \ldots; x_{N}]+\mathbf{x}_{pos}$, where $x_{reg}\in\mathbb{R}^{C}$ is a trainable regression token and $x_{i}$ is the $i$th patch of the feature map.
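Building $\mathbf{z}$ amounts to prepending the regression token to the $N$ patches and adding one positional vector per position, $N+1$ in total. The sketch below uses plain lists for illustration; in a real model `reg_token` and `pos_encoding` would be trainable parameters.

```python
def build_token_sequence(patches, reg_token, pos_encoding):
    """Return z = [x_reg; x_1; ...; x_N] + x_pos as a list of (N + 1) vectors of length C."""
    seq = [reg_token] + patches  # regression token goes first, at index 0
    if len(pos_encoding) != len(seq):
        raise ValueError("positional encoding must have N + 1 rows")
    # Element-wise addition of the positional vector at each position.
    return [[a + b for a, b in zip(tok, pos)] for tok, pos in zip(seq, pos_encoding)]


z = build_token_sequence([[1, 2], [3, 4]], [0, 0], [[10, 10], [20, 20], [30, 30]])
print(z)  # [[10, 10], [21, 22], [33, 34]]
```

Placing the regression token at index 0 is what later allows the age to be read from a single fixed output position.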

The sequence $\mathbf{z}$, denoted $z^{1}$ below, is then fed into $L$ transformer encoder blocks, each composed of layer normalization (LN)28, multi-head self-attention (MSA)23,27, and a multilayer perceptron (MLP) containing two linear layers with a Gaussian Error Linear Unit (GELU) activation. Each encoder block computes:

$$\bar{z}^{l} = \mathrm{MSA}\left(\mathrm{LN}\left(z^{l}\right)\right) + z^{l}, \quad l = 1, 2, \ldots, L$$

$$z^{l+1} = \mathrm{MLP}\left(\mathrm{LN}\left(\bar{z}^{l}\right)\right) + \bar{z}^{l}, \quad l = 1, 2, \ldots, L$$

where $l$ denotes the $l$th transformer encoder block. Finally, the age is estimated by the regression head from $z_{reg}^{L+1}$, the output at the regression-token position.
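The two encoder equations and the regression readout can be sketched in pure Python for tiny dimensions. This is a simplified illustration, not the authors' implementation: linear layers have no biases, LayerNorm has no learnable gain or bias, dropout is omitted, and the random weights and the linear `w_head`/`b_head` regression head are hypothetical.

```python
import math
import random


def layer_norm(x, eps=1e-6):
    m = sum(x) / len(x)
    var = sum((v - m) ** 2 for v in x) / len(x)
    return [(v - m) / math.sqrt(var + eps) for v in x]


def matvec(W, x):  # W has shape [out][in]
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def softmax(s):
    mx = max(s)
    e = [math.exp(v - mx) for v in s]
    t = sum(e)
    return [v / t for v in e]


def gelu(v):
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))


def msa(tokens, p, heads):
    """Multi-head scaled dot-product self-attention over a list of token vectors."""
    dh = len(tokens[0]) // heads
    Q, K, V = ([matvec(p[n], t) for t in tokens] for n in ("Wq", "Wk", "Wv"))
    out = []
    for q in Q:
        concat = []
        for h in range(heads):
            sl = slice(h * dh, (h + 1) * dh)
            w = softmax([sum(a * b for a, b in zip(q[sl], k[sl])) / math.sqrt(dh) for k in K])
            concat += [sum(wi * v[sl][j] for wi, v in zip(w, V)) for j in range(dh)]
        out.append(matvec(p["Wo"], concat))
    return out


def encoder_block(z, p, heads):
    # z_bar^l = MSA(LN(z^l)) + z^l
    attn = msa([layer_norm(t) for t in z], p, heads)
    z_bar = [[a + b for a, b in zip(u, t)] for u, t in zip(attn, z)]
    # z^{l+1} = MLP(LN(z_bar^l)) + z_bar^l, with MLP = Linear -> GELU -> Linear
    return [[a + b for a, b in zip(matvec(p["W2"], [gelu(v) for v in matvec(p["W1"], layer_norm(t))]), t)]
            for t in z_bar]


def random_params(d, hidden, seed):
    rng = random.Random(seed)
    mat = lambda r, c: [[rng.gauss(0.0, 0.1) for _ in range(c)] for _ in range(r)]
    return {"Wq": mat(d, d), "Wk": mat(d, d), "Wv": mat(d, d), "Wo": mat(d, d),
            "W1": mat(hidden, d), "W2": mat(d, hidden)}


def encode_and_regress(z, blocks, heads, w_head, b_head):
    for p in blocks:  # l = 1, ..., L
        z = encoder_block(z, p, heads)
    z_reg = z[0]  # output at the regression-token position, z_reg^{L+1}
    return sum(w * v for w, v in zip(w_head, z_reg)) + b_head
```

With all weights set to zero both sublayers output zero, so each block reduces to the identity through its residual connections, which is a convenient sanity check of the two equations.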

Learning details

To train the model efficiently, we employed transfer learning, which helps overcome the weak inductive bias and improves accuracy. That is, we initialized the model parameters with weights pre-trained on ImageNet21k and then fine-tuned them on our panoramic-image dataset. The models were trained for 100 epochs using a stochastic gradient descent (SGD) optimizer with a momentum of 0.9, a learning rate of 0.01, and a batch size of 16, with the mean absolute error (MAE) as the objective function. After each training epoch, the model was evaluated on the validation set. When training was completed, the weights of the model with the lowest validation MAE were retained.
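The optimization loop described above (SGD with momentum 0.9, learning rate 0.01, batch size 16, 100 epochs, MAE objective, validation after every epoch, and keeping the weights with the lowest validation MAE) can be sketched as follows. To stay self-contained, a hypothetical one-feature linear model stands in for ResNet50-ViT; the momentum update uses the form $v \leftarrow \mu v + g$, $\theta \leftarrow \theta - \eta v$, which is one common convention and an assumption here.

```python
import random


def mae(preds, targets):
    """Mean absolute error, the objective named in the text."""
    return sum(abs(p - t) for p, t in zip(preds, targets)) / len(targets)


def predict(params, x):
    # Hypothetical stand-in for ResNet50-ViT: a one-feature linear model.
    return params[0] * x + params[1]


def train(train_set, val_set, epochs=100, lr=0.01, momentum=0.9, batch_size=16, seed=0):
    rng = random.Random(seed)
    params, velocity = [0.0, 0.0], [0.0, 0.0]
    best_mae, best_params = float("inf"), list(params)
    data = list(train_set)
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            grads = [0.0, 0.0]
            for x, y in batch:
                p = predict(params, x)
                s = (p > y) - (p < y)  # subgradient of |p - y| is sign(p - y)
                grads[0] += s * x / len(batch)
                grads[1] += s / len(batch)
            for k in range(2):
                # SGD with momentum: v <- mu * v + g; theta <- theta - lr * v
                velocity[k] = momentum * velocity[k] + grads[k]
                params[k] -= lr * velocity[k]
        # Evaluate on the validation set after every epoch; keep the best weights.
        val_mae = mae([predict(params, x) for x, _ in val_set], [y for _, y in val_set])
        if val_mae < best_mae:
            best_mae, best_params = val_mae, list(params)
    return best_params, best_mae
```

Selecting the checkpoint by validation MAE, rather than taking the final epoch, guards against late-epoch overfitting or oscillation without requiring an early-stopping rule.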

Ethical approval and informed consent

This study was conducted in accordance with the guidelines of the World Medical Association Helsinki Declaration for Biomedical Research Involving Human Subjects. It was approved by the Institutional Review Board of Daejeon Dental Hospital, Wonkwang University (W2304/003-001). Owing to the non-interventional retrospective design of this study and because all data were analyzed anonymously, the IRB waived the need for individual informed consent, either written or verbal, from the participants.